Creating a Class that performs all parts of Machine Learning automatically with Python

In this article, we design a Python class whose methods make it easy to work with data through the different phases of machine learning. We demonstrate it with a dataframe of flight prices.

Introduction:

A dataframe is a table-like structure that contains rows and columns, where each row represents a sample and each column represents a feature.

Working with dataframes is an essential part of the machine learning process because they let us manipulate and analyze data in a structured, organized way. Organizing and preprocessing the data in a dataframe makes it easier to feed into machine learning models and to extract insights from it, which greatly simplifies building and evaluating those models.
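Here is a quick illustration of that structure: a tiny pandas dataframe where each row is a sample (a flight) and each column is a feature. The values are made up for the example. Only the column names loosely follow the flight dataset used later.

```python
import pandas as pd

# Each row is one sample (a flight); each column is one feature.
# Toy values, not rows from the real dataset.
flights = pd.DataFrame({
    "airline": ["SpiceJet", "Vistara", "AirAsia"],
    "stops": ["zero", "one", "zero"],
    "days_left": [1, 15, 30],
    "price": [5953, 7000, 3000],
})

print(flights.shape)  # (3, 4): 3 samples, 4 columns
# Filtering rows and aggregating a column is a one-liner
print(flights[flights["stops"] == "zero"]["price"].mean())  # 4476.5
```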

Basic Machine Learning Phases:

The process of developing a machine learning model typically consists of four main phases: preprocessing, training, prediction, and evaluation.

- Preprocessing: This phase involves preparing the data for input into the machine learning model. This can include tasks such as cleaning the data, handling missing values, and normalizing or scaling the features. Preprocessing the data is important because it can help to improve the performance and accuracy of the model.

- Training: During the training phase, the machine learning model is fed the preprocessed data and uses it to learn patterns and relationships that will allow it to make predictions on new data.

- Prediction: Once the model has been trained, it can be used to make predictions on new, unseen data. This is known as the prediction phase. The model uses the patterns and relationships it learned during training to make predictions about the output for a given input.

- Evaluation: The final phase of the machine learning process is evaluation. During this phase, the model's performance is assessed with a set of metrics, such as accuracy for classification, or RMSE, MAE and the R2 score for regression. This helps to determine how well the model makes predictions on new data and to identify any areas where it may need to be improved.

Overall, these four phases form the basis of the machine learning process and are essential for building and evaluating effective machine learning models.

Our Example:

We have a dataframe of flight prices. The data can be obtained at the following link.

This dataframe includes the following columns:

- Airline: The name of the airline operating the flight.

- Flight: The name (flight code) of the plane.

- Source City: The city or airport where the flight departs from.

- Departure Time: Morning, evening, afternoon, night or early morning.

- Stops: The number of stops the flight makes.

- Arrival Time: Morning, evening, afternoon, night or early morning.

- Destination City: The city or airport where the flight arrives.

- Days Left: The number of days left before the flight departs.

- Price: The price of the flight. This is the variable we will try to predict.

This Problem - Regression:

A regression problem is a type of machine learning problem in which you try to predict a continuous numerical value, rather than a discrete label. For example, you might try to predict the price of a house based on its characteristics (size, location, etc.), or the fuel consumption of a car based on its characteristics (engine size, weight, etc.).

In this case, we try to predict the price of the flight, which is a numerical value. Apart from a few parts of what we will see below, the same solution would also apply to a classification problem.

Jupyter Notebook Code:

You can find all the code explained in this Google Colab file:

https://colab.research.google.com/drive/1wDaFqFyz3nPuiWQVbltRHztossNhUfTB?usp=sharing

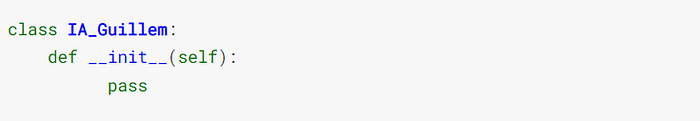

Designing the class:

In Python, a class is a template for creating objects. It defines the attributes (properties) and behaviors (methods) that an object of that class will have.

To define a class in Python, you use the class keyword, followed by the name of the class and a colon. Then, you define the attributes and behaviors of the class within the indented block of code below the class definition.

- Attributes are defined as variables within the class definition. They represent the properties of an object of that class.

- Behaviors are defined as functions within the class definition. They represent the actions that an object of that class can perform.

Once you have defined a class, you can create instances of that class, also known as objects. To do this, you use the name of the class followed by parentheses. You can then access the attributes and behaviors of an object using dot notation.
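Here is a minimal sketch of these ideas. The class `FlightModel` and its methods are hypothetical and not part of the article's code. The attributes are set in `__init__` through `self`. The methods read and modify those attributes. The object is created by calling the class name with parentheses.

```python
class FlightModel:
    """Hypothetical example class, not the article's implementation."""

    def __init__(self, model_name):
        # Attributes: data stored on each object
        self.model_name = model_name
        self.results = []

    def add_result(self, r2):
        # Method that modifies the object's own data through self
        self.results.append(r2)

    def best_r2(self):
        # Method that reads the object's data and returns a value
        return max(self.results)


# Create an instance (object) and use dot notation on it
rf = FlightModel("RandomForest")
rf.add_result(0.91)
rf.add_result(0.95)
print(rf.model_name, rf.best_r2())  # RandomForest 0.95
```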

Methods:

In Python, a function defined within a class is known as a method. Methods are used to define the behavior of an object. They are called on an object, and can access and modify the data contained within the object.

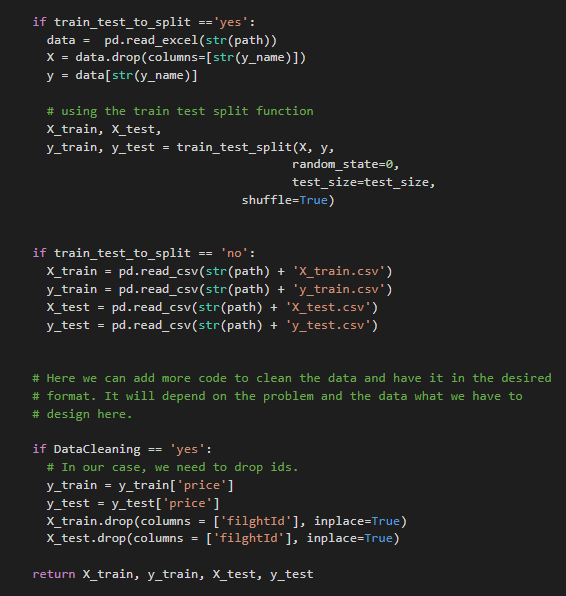

- Read

First of all, we define a method that reads the data. It takes several inputs and returns X_train, X_test, y_train and y_test.

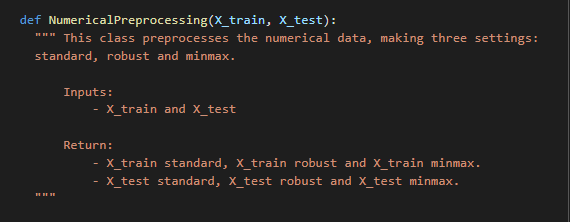
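Here is a rough, hypothetical sketch of such a method. It assumes the data comes as a single CSV file with a `price` column and is split with scikit-learn's `train_test_split`. The function name, parameters and defaults are our assumptions. The article's version also handles data that is already split, which this sketch does not cover.

```python
import io

import pandas as pd
from sklearn.model_selection import train_test_split


def read_data(path, target="price", test_size=0.2, random_state=42):
    """Read a CSV and return X_train, X_test, y_train, y_test.

    `path` can be a file path or any file-like object pd.read_csv accepts.
    Parameter names and defaults are assumptions for this sketch.
    """
    df = pd.read_csv(path)
    X = df.drop(columns=[target])  # features: every column except the target
    y = df[target]                 # target: the flight price
    return train_test_split(X, y, test_size=test_size, random_state=random_state)


# Five toy rows (made-up values) so the example runs without downloading data
csv = io.StringIO(
    "airline,days_left,price\n"
    "SpiceJet,1,5953\n"
    "Vistara,10,6000\n"
    "AirAsia,20,3000\n"
    "Indigo,30,2500\n"
    "GO_FIRST,40,2400\n"
)
X_train, X_test, y_train, y_test = read_data(csv, test_size=0.4)
print(X_train.shape, X_test.shape)  # (3, 2) (2, 2)
```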

- Numerical Preprocessing:

In this method, given the train and test data as input, we apply several scalings: standard, robust and min-max. It returns X_train and X_test transformed with each of these scalings.

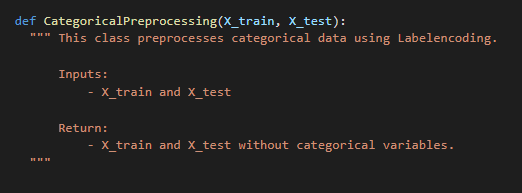
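Here is a possible sketch of this step using scikit-learn's `StandardScaler`, `RobustScaler` and `MinMaxScaler`. The function name and the dictionary it returns are assumptions, not the article's code. Each scaler is fitted on the training set only and then applied to the test set, so no information from the test set leaks into the preprocessing. Strictly speaking, only `StandardScaler` standardizes; the other two rescale.

```python
import pandas as pd
from sklearn.preprocessing import MinMaxScaler, RobustScaler, StandardScaler


def numerical_preprocessing(X_train, X_test):
    """Return {scaler_name: (X_train_scaled, X_test_scaled)} for three scalers."""
    scalers = {
        "standard": StandardScaler(),  # zero mean, unit variance
        "robust": RobustScaler(),      # centre on the median, scale by the IQR
        "minmax": MinMaxScaler(),      # map the training range to [0, 1]
    }
    scaled = {}
    for name, scaler in scalers.items():
        # Fit on the training set only, then reuse the same transform on test
        train = scaler.fit_transform(X_train)
        test = scaler.transform(X_test)
        scaled[name] = (
            pd.DataFrame(train, columns=X_train.columns, index=X_train.index),
            pd.DataFrame(test, columns=X_test.columns, index=X_test.index),
        )
    return scaled


X_train = pd.DataFrame({"days_left": [1.0, 10.0, 20.0, 30.0]})
X_test = pd.DataFrame({"days_left": [5.0, 40.0]})
scaled = numerical_preprocessing(X_train, X_test)
print(scaled["minmax"][1]["days_left"].round(3).tolist())
```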

- Categorical Preprocessing:

This method preprocesses the categorical data using LabelEncoder and returns X_train and X_test without the categorical variables.

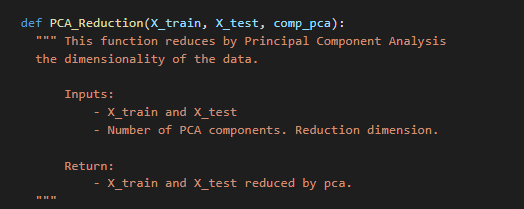

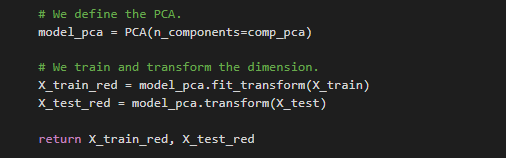
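Here is one way to write this step. It assumes that every non-numeric column is categorical; the function name and the handling of unseen categories are also our assumptions. Each encoder is fitted on the training data. Because `LabelEncoder.transform` raises an error for labels it has not seen, test values are mapped through the learned classes, and unseen ones get the code -1. In this sketch, the encoded columns replace the original text columns. Note that scikit-learn documents `LabelEncoder` for target values; `OrdinalEncoder` is its counterpart for feature columns.

```python
import pandas as pd
from sklearn.preprocessing import LabelEncoder


def categorical_preprocessing(X_train, X_test):
    """Label-encode every non-numeric column, fitting on the training set."""
    X_train, X_test = X_train.copy(), X_test.copy()
    cat_cols = [c for c in X_train.columns
                if not pd.api.types.is_numeric_dtype(X_train[c])]
    for col in cat_cols:
        encoder = LabelEncoder().fit(X_train[col])
        mapping = {label: code for code, label in enumerate(encoder.classes_)}
        X_train[col] = encoder.transform(X_train[col])
        # Map test values manually; categories unseen in training become -1
        X_test[col] = X_test[col].map(mapping).fillna(-1).astype(int)
    return X_train, X_test


X_train = pd.DataFrame({"airline": ["Vistara", "SpiceJet", "Vistara"],
                        "days_left": [1, 2, 3]})
X_test = pd.DataFrame({"airline": ["SpiceJet", "Indigo"], "days_left": [4, 5]})
tr, te = categorical_preprocessing(X_train, X_test)
print(tr["airline"].tolist(), te["airline"].tolist())  # [1, 0, 1] [0, -1]
```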

- PCA Reduction:

This method performs a PCA reduction using the requested number of components.

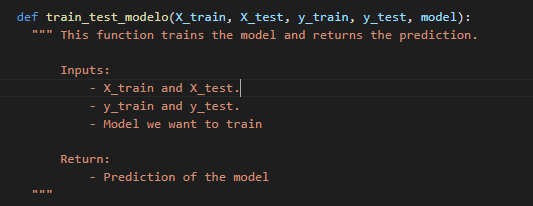

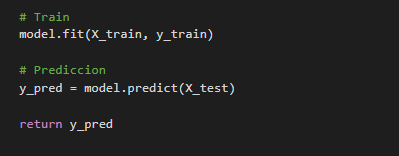
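Here is a minimal sketch using scikit-learn's `PCA`. It assumes that `n_components=None` means keeping all components, that is, no reduction. The function name is hypothetical. The components are learned from the training set and then reused for the test set.

```python
import numpy as np
from sklearn.decomposition import PCA


def pca_reduction(X_train, X_test, n_components=None):
    """Project the data onto `n_components` principal components.

    n_components=None returns the data unchanged (all components kept).
    """
    if n_components is None:
        return X_train, X_test
    pca = PCA(n_components=n_components)
    # Learn the principal components on the training set only
    X_train_pca = pca.fit_transform(X_train)
    X_test_pca = pca.transform(X_test)
    return X_train_pca, X_test_pca


rng = np.random.default_rng(0)
X_train, X_test = rng.normal(size=(50, 8)), rng.normal(size=(10, 8))
for k in (2, 4, None):
    tr, te = pca_reduction(X_train, X_test, k)
    print(k, tr.shape, te.shape)
```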

- Train/Test Model:

This method trains the model, predicts the values and returns the prediction.

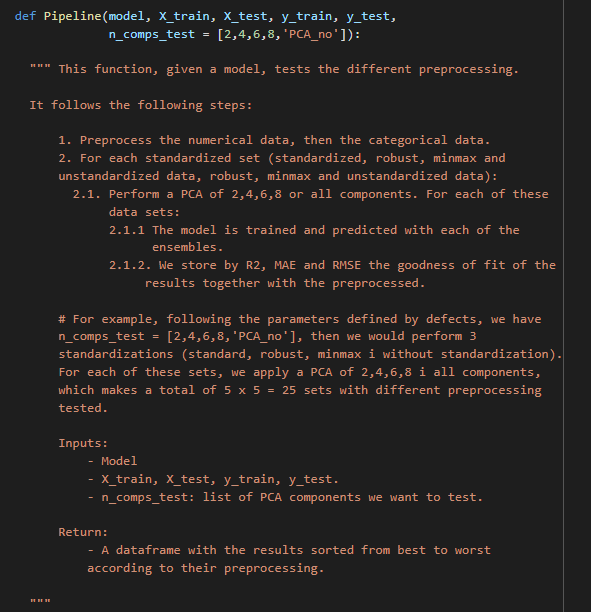

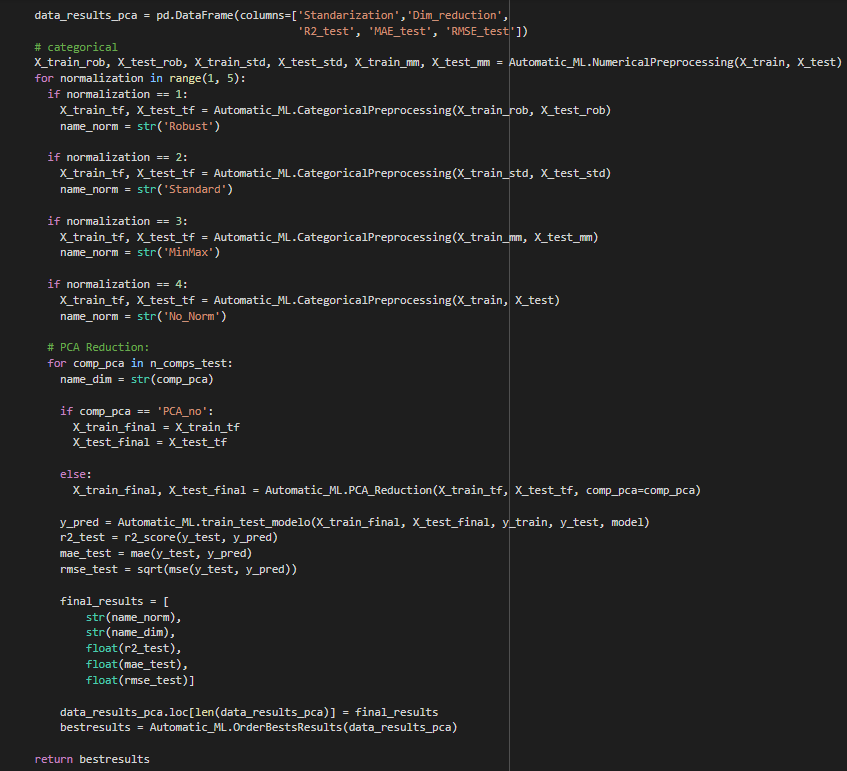
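Here is a sketch of this method. It accepts any scikit-learn regressor; the example uses a `RandomForestRegressor` on synthetic data from `make_regression`, not the flight data. The function name is hypothetical.

```python
from sklearn.datasets import make_regression
from sklearn.ensemble import RandomForestRegressor
from sklearn.model_selection import train_test_split


def train_test_model(model, X_train, y_train, X_test):
    """Fit `model` on the training data and return its predictions for X_test."""
    model.fit(X_train, y_train)
    return model.predict(X_test)


# Synthetic regression data stands in for the preprocessed flight data
X, y = make_regression(n_samples=200, n_features=5, noise=10, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)
model = RandomForestRegressor(n_estimators=50, random_state=0)
y_pred = train_test_model(model, X_train, y_train, X_test)
print(y_pred.shape)  # (50,)
```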

- Pipeline:

This pipeline is used to test different preprocessing combinations. We test each of the scalings, as well as the unscaled data, together with the possible PCA reductions.

We then save the R2, MAE and RMSE obtained on the test set for each preprocessing combination and order the results by R2.

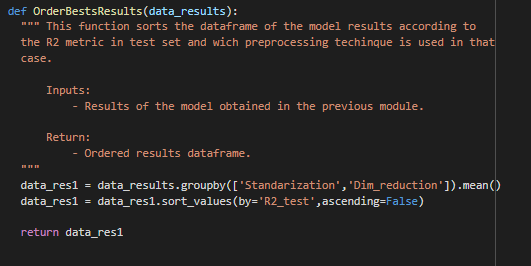
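Here is a hedged sketch of such a pipeline; it is not the article's implementation. It combines no scaling and the three scalers with a few PCA options using scikit-learn's `make_pipeline`. For each combination, it records R2, MAE and RMSE on the test set, and then it sorts the table by R2. The names `preprocessing_pipeline` and `SCALERS`, the `"all"` label for "no PCA" and the synthetic data are all assumptions.

```python
import numpy as np
import pandas as pd
from sklearn.datasets import make_regression
from sklearn.decomposition import PCA
from sklearn.ensemble import RandomForestRegressor
from sklearn.metrics import mean_absolute_error, mean_squared_error, r2_score
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import MinMaxScaler, RobustScaler, StandardScaler

SCALERS = {"none": None, "standard": StandardScaler,
           "robust": RobustScaler, "minmax": MinMaxScaler}


def preprocessing_pipeline(model_factory, X_train, X_test, y_train, y_test,
                           pca_options=(2, 4, None)):
    """Try every scaler x PCA combination and return metrics sorted by R2."""
    rows = []
    for scaler_name, scaler_cls in SCALERS.items():
        for n_comp in pca_options:
            steps = []
            if scaler_cls is not None:
                steps.append(scaler_cls())
            if n_comp is not None:  # None means keep all components
                steps.append(PCA(n_components=n_comp))
            steps.append(model_factory())  # a fresh model for every run
            y_pred = make_pipeline(*steps).fit(X_train, y_train).predict(X_test)
            rows.append({
                "scaler": scaler_name,
                "pca": n_comp if n_comp is not None else "all",
                "R2": r2_score(y_test, y_pred),
                "MAE": mean_absolute_error(y_test, y_pred),
                "RMSE": np.sqrt(mean_squared_error(y_test, y_pred)),
            })
    results = pd.DataFrame(rows)
    return results.sort_values("R2", ascending=False).reset_index(drop=True)


X, y = make_regression(n_samples=300, n_features=8, noise=15, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)
results = preprocessing_pipeline(
    lambda: RandomForestRegressor(n_estimators=50, random_state=0),
    X_train, X_test, y_train, y_test)
print(results.head())
```

Passing a factory such as `lambda: RandomForestRegressor(...)` instead of a single model object ensures that each combination trains a fresh, unfitted model.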

- Order Results:

This method sorts the results dataframe by R2.

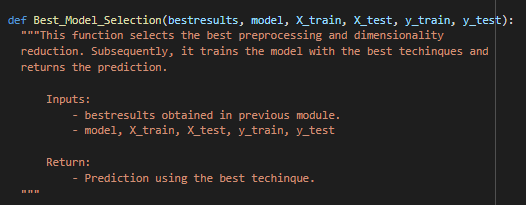

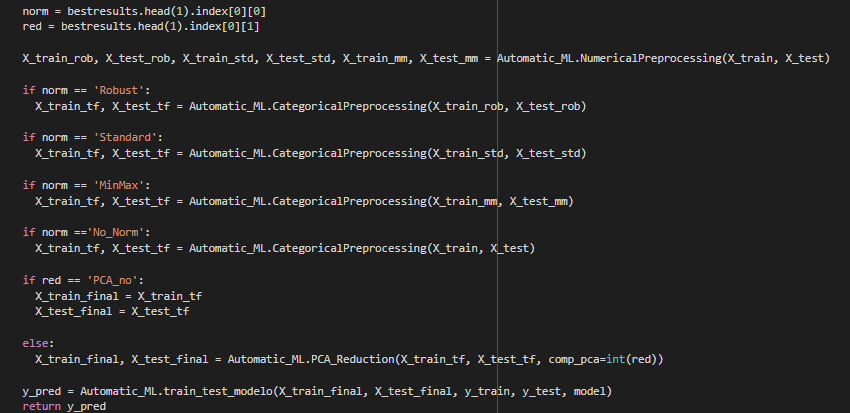

- Best Model Selection:

Given the results obtained with the different preprocessing methods, this method selects the configuration that achieves the best performance. For example, the best preprocessing for a Random Forest might turn out to be standard scaling together with an 8-component PCA.

In this part, we select the best-performing preprocessing, train the model with it and return the predictions it makes.

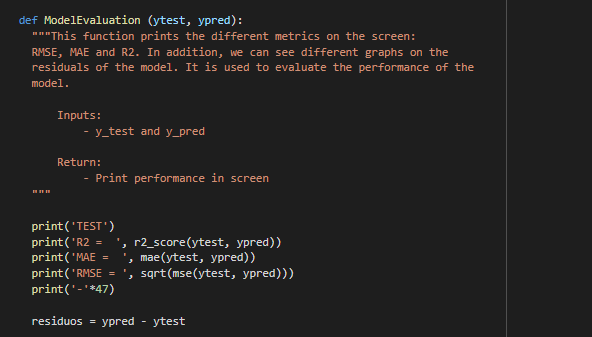

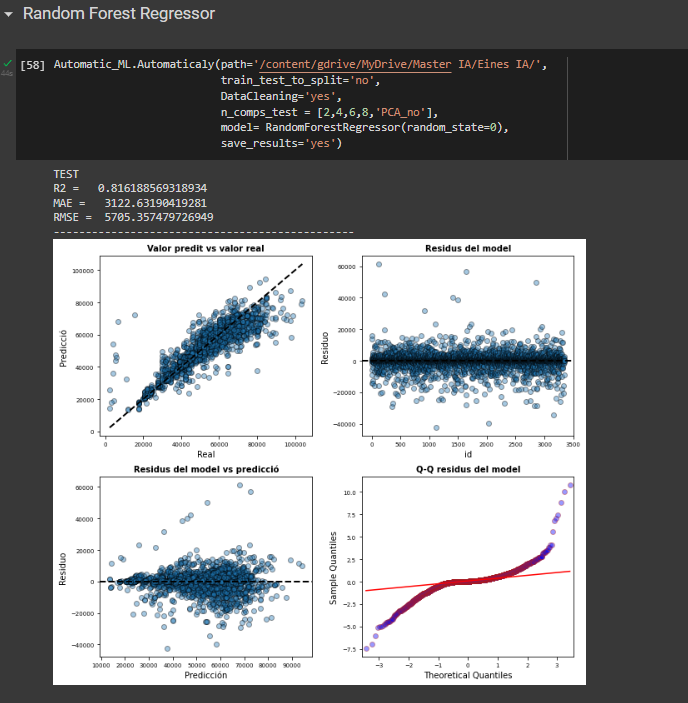
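Here is a possible sketch of this step. It assumes that the results table has `scaler`, `pca` and `R2` columns like the ones described above. The helper name, the `SCALERS` mapping and the placeholder scores are hypothetical. The function takes the top row by R2, rebuilds that preprocessing, retrains the model and returns its predictions.

```python
import pandas as pd
from sklearn.datasets import make_regression
from sklearn.decomposition import PCA
from sklearn.ensemble import RandomForestRegressor
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import MinMaxScaler, RobustScaler, StandardScaler

# Map the scaler names stored in the results table to scikit-learn classes
SCALERS = {"none": None, "standard": StandardScaler,
           "robust": RobustScaler, "minmax": MinMaxScaler}


def best_model_prediction(results, model_factory, X_train, y_train, X_test):
    """Rebuild the top-ranked preprocessing, retrain the model and predict."""
    best = results.sort_values("R2", ascending=False).iloc[0]
    steps = []
    if SCALERS[best["scaler"]] is not None:
        steps.append(SCALERS[best["scaler"]]())
    if best["pca"] != "all":  # "all" means no PCA reduction
        steps.append(PCA(n_components=int(best["pca"])))
    steps.append(model_factory())
    pipe = make_pipeline(*steps).fit(X_train, y_train)
    return best, pipe.predict(X_test)


# Placeholder results table (made-up scores) standing in for the pipeline output
results = pd.DataFrame({"scaler": ["standard", "robust", "none"],
                        "pca": [8, "all", 2],
                        "R2": [0.80, 0.90, 0.70]})
X, y = make_regression(n_samples=200, n_features=8, noise=10, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)
best, y_pred = best_model_prediction(
    results, lambda: RandomForestRegressor(n_estimators=50, random_state=0),
    X_train, y_train, X_test)
print(best["scaler"], best["pca"], y_pred.shape)  # robust all (50,)
```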

- Model Evaluation:

This method is used to evaluate the performance of the model.

First, it prints the R2, MAE and RMSE metrics.

It also plots the residuals, which can help us better understand the model's performance (the code in the image is incomplete).

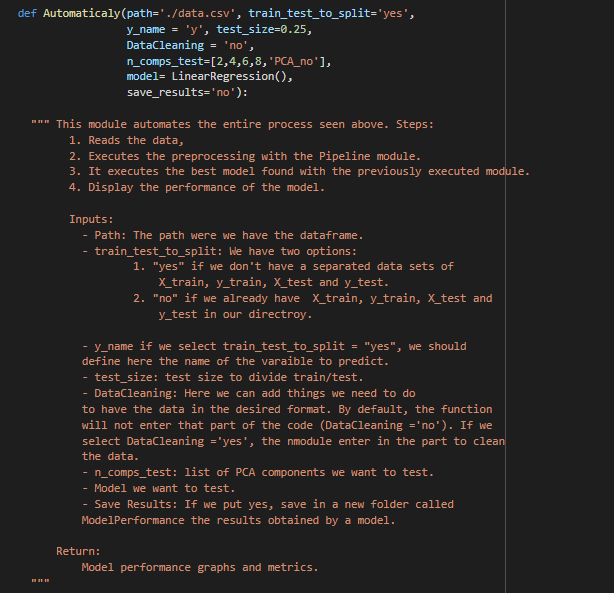
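Since the code in the image is incomplete, here is a minimal sketch of an evaluation method. It is our own version, assuming scikit-learn metrics and matplotlib for the residual plot. RMSE is computed as the square root of `mean_squared_error`, which works across scikit-learn versions. Plotting is optional, so the metrics can be computed without a display.

```python
import numpy as np
from sklearn.metrics import mean_absolute_error, mean_squared_error, r2_score


def evaluate_model(y_test, y_pred, plot=False):
    """Print R2, MAE and RMSE and optionally plot the residuals."""
    y_test, y_pred = np.asarray(y_test), np.asarray(y_pred)
    metrics = {
        "R2": r2_score(y_test, y_pred),
        "MAE": mean_absolute_error(y_test, y_pred),
        "RMSE": float(np.sqrt(mean_squared_error(y_test, y_pred))),
    }
    for name, value in metrics.items():
        print(f"{name}: {value:.4f}")
    if plot:
        import matplotlib.pyplot as plt  # only needed when plotting

        residuals = y_test - y_pred
        plt.scatter(y_pred, residuals, s=10, alpha=0.6)
        plt.axhline(0, color="red", linewidth=1)  # perfect predictions lie here
        plt.xlabel("Predicted price")
        plt.ylabel("Residual (actual - predicted)")
        plt.title("Residual plot")
        plt.show()
    return metrics


# Prints R2: 0.9860, MAE: 12.5000 and RMSE: 13.2288, one per line
metrics = evaluate_model([100, 200, 300, 400], [110, 190, 310, 380])
```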

- Automatic:

This method runs all the phases described in this article:

1. Read the data.

2. Run the preprocessing combinations with the Pipeline method.

3. Run the best model found by the previously executed Pipeline method.

4. Visualize the model's performance.
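The following compact, hypothetical sketch shows how the phases can fit together in a single class. The class `AutoRegressor` and its method names are ours, not the article's. For brevity, it reads from an in-memory dataframe instead of a path, skips the categorical preprocessing and the residual plot, and runs on synthetic data from `make_regression`.

```python
import numpy as np
import pandas as pd
from sklearn.datasets import make_regression
from sklearn.decomposition import PCA
from sklearn.ensemble import RandomForestRegressor
from sklearn.metrics import mean_absolute_error, mean_squared_error, r2_score
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import MinMaxScaler, RobustScaler, StandardScaler


class AutoRegressor:
    """Hypothetical, compact version of an automatic ML class."""

    SCALERS = {"none": None, "standard": StandardScaler,
               "robust": RobustScaler, "minmax": MinMaxScaler}

    def __init__(self, model_factory, pca_options=(2, 4, None)):
        self.model_factory = model_factory  # returns a fresh, unfitted model
        self.pca_options = pca_options      # None means no PCA reduction

    def read(self, df, target):
        # 1. Read the data: split the dataframe into train and test sets
        X, y = df.drop(columns=[target]), df[target]
        self.X_train, self.X_test, self.y_train, self.y_test = train_test_split(
            X, y, test_size=0.2, random_state=42)

    def _build(self, scaler, n_comp):
        # Assemble scaler -> optional PCA -> model as one scikit-learn pipeline
        steps = [] if self.SCALERS[scaler] is None else [self.SCALERS[scaler]()]
        if n_comp is not None:
            steps.append(PCA(n_components=n_comp))
        return make_pipeline(*steps, self.model_factory())

    @staticmethod
    def _metrics(y_true, y_pred):
        return {"R2": r2_score(y_true, y_pred),
                "MAE": mean_absolute_error(y_true, y_pred),
                "RMSE": float(np.sqrt(mean_squared_error(y_true, y_pred)))}

    def pipeline(self):
        # 2. Try every scaler x PCA option and rank the runs by R2
        rows = []
        for scaler in self.SCALERS:
            for n_comp in self.pca_options:
                pipe = self._build(scaler, n_comp).fit(self.X_train, self.y_train)
                scores = self._metrics(self.y_test, pipe.predict(self.X_test))
                rows.append({"scaler": scaler, "pca": n_comp, **scores})
        self.results = pd.DataFrame(rows).sort_values("R2", ascending=False)
        return self.results

    def best_model(self):
        # 3. Retrain with the best preprocessing and predict the test set
        best = self.results.iloc[0]
        n_comp = None if pd.isna(best["pca"]) else int(best["pca"])
        pipe = self._build(best["scaler"], n_comp).fit(self.X_train, self.y_train)
        self.y_pred = pipe.predict(self.X_test)
        return best

    def evaluate(self):
        # 4. Report the metrics of the selected model
        return self._metrics(self.y_test, self.y_pred)

    def automatic(self, df, target):
        self.read(df, target)
        self.pipeline()
        best = self.best_model()
        return best, self.evaluate()


X, y = make_regression(n_samples=300, n_features=6, noise=10, random_state=0)
df = pd.DataFrame(X, columns=[f"x{i}" for i in range(6)]).assign(price=y)
auto = AutoRegressor(lambda: RandomForestRegressor(n_estimators=50, random_state=0))
best, metrics = auto.automatic(df, target="price")
print(best["scaler"], best["pca"], metrics)
```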

Final Example:

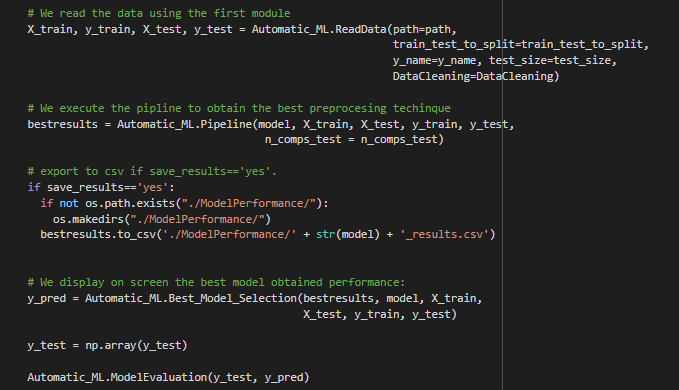

Let’s assume that we want to test the Random Forest.

As we can see, we have set the path to the data. Since the data is already divided into x_train, x_test, y_train and y_test, we set the parameter train_test_to_split='no'. We also set DataCleaning='yes'. This part of the code is where we can add whatever steps are needed to get the data into the desired format; by default, the function skips it. In our case, we need to remove the flight IDs from the data.
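Here is a minimal sketch of an optional cleaning step controlled by a `DataCleaning='yes'/'no'` flag. The helper `data_cleaning` is hypothetical. It assumes that the flight IDs are stored in a column called `flight`. The flight codes below are toy values.

```python
import pandas as pd


def data_cleaning(df, DataCleaning="no"):
    """Hypothetical optional cleaning step, skipped unless DataCleaning == "yes"."""
    if DataCleaning != "yes":
        # Default behaviour: leave the data untouched
        return df
    # Problem-specific cleaning goes here. For the flight-price data we
    # remove the flight IDs; the column name "flight" is an assumption.
    return df.drop(columns=["flight"])


df = pd.DataFrame({"flight": ["SG-8709", "UK-706"],
                   "days_left": [1, 2],
                   "price": [5953, 6000]})
print(data_cleaning(df, DataCleaning="yes").columns.tolist())  # ['days_left', 'price']
print(data_cleaning(df).columns.tolist())  # ['flight', 'days_left', 'price']
```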

Next, we test PCA reductions to 2, 4, 6 and 8 components, as well as all components; these values should be chosen according to the problem to be solved. As we can see, the model is a RandomForest. Because we set SaveResults='yes', a folder called ModelPerformance is created. In it, a .csv file named after the tested model (in this case, RandomForest) stores the results of all the preprocessing combinations.
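Here is a sketch of how the results could be saved. The helper `save_results` is hypothetical. The `SaveResults` flag, the `ModelPerformance` folder and the one-file-per-model naming follow the description above. The example writes to a temporary directory so it leaves no files behind. The scores in the table are placeholders.

```python
import os
import tempfile

import pandas as pd


def save_results(results, model_name, SaveResults="no", folder="ModelPerformance"):
    """Hypothetical helper: write results to <folder>/<model_name>.csv."""
    if SaveResults != "yes":
        return None
    os.makedirs(folder, exist_ok=True)  # create the folder if it does not exist
    path = os.path.join(folder, f"{model_name}.csv")
    results.to_csv(path, index=False)
    return path


# Placeholder results table, only used to exercise the function
results = pd.DataFrame({"scaler": ["robust", "standard"],
                        "pca": ["all", 8],
                        "R2": [0.9, 0.8]})
with tempfile.TemporaryDirectory() as tmp:
    path = save_results(results, "RandomForest", SaveResults="yes",
                        folder=os.path.join(tmp, "ModelPerformance"))
    saved = pd.read_csv(path)
print(os.path.basename(path), saved.shape)  # RandomForest.csv (2, 3)
```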

So we can see that the preprocessing that worked best for this Random Forest is robust scaling with no dimensionality reduction.

Thanks for reading my article.

Once again, you can find all the code at the following link: